In a rapidly evolving technology sector, AI and accessibility testing are converging and shaping how digital environments are built and experienced. Artificial intelligence is fueling productivity, but it is also improving accessibility for people with disabilities: it creates better user experiences, expands access, broadens the ways people interact and navigate, and opens new doors for millions of people.

AI-generated captions, smart screen readers, and sophisticated image recognition are all examples of how AI is dismantling long-standing digital barriers. As more of our lives move online, inclusive, accessible experiences are critical rather than optional.

Digital Accessibility Landscape: Where Do We Stand Today?

Digital accessibility means designing and developing digital platforms that everyone can use, including people with physical, sensory, and cognitive disabilities. While accessibility is gaining traction, it is still maturing. Numerous websites, applications, and tools remain difficult or even impossible to use, even for people who rely on assistive technologies.

According to the World Health Organization, over 1 billion people (around 15% of the global population) experience some form of disability. A 2023 audit of websites found that 96.3% of the home pages surveyed contained at least one detectable accessibility issue. Accessibility issues include anything that blocks users or their assistive technologies, such as screen readers: for example, videos without captions, missing or incorrect labels, and poor color contrast.
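Color contrast is one of the few issues on that list that can be measured exactly. WCAG 2.x defines a contrast ratio based on the relative luminance of the foreground and background colors, and level AA requires at least 4.5:1 for normal text (3:1 for large text). The sketch below implements that formula; the function names are illustrative, and it only accepts six-digit hex colors.

```python
def _linearize(channel: int) -> float:
    """Convert an 8-bit sRGB channel to linear light, as defined in WCAG 2.x."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a six-digit hex color such as '#1a2b3c'."""
    h = hex_color.lstrip("#")
    if len(h) != 6:
        raise ValueError(f"expected a six-digit hex color, got {hex_color!r}")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearize(r) + 0.7152 * _linearize(g) + 0.0722 * _linearize(b)


def contrast_ratio(foreground: str, background: str) -> float:
    """WCAG contrast ratio (L1 + 0.05) / (L2 + 0.05), where L1 is the lighter color."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)), reverse=True
    )
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(foreground: str, background: str, large_text: bool = False) -> bool:
    """Level AA threshold: 4.5:1 for normal text, 3:1 for large text."""
    return contrast_ratio(foreground, background) >= (3.0 if large_text else 4.5)


if __name__ == "__main__":
    print(round(contrast_ratio("#777777", "#ffffff"), 2))  # 4.48
    print(passes_aa("#777777", "#ffffff"))  # False: just below 4.5:1
    print(passes_aa("#767676", "#ffffff"))  # True
```

A mid-gray such as `#777777` on white looks readable to many people but falls just short of the AA threshold, which is exactly the kind of near-miss automated checkers are good at catching.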

The data is clear: technology evolves very quickly, and accessibility is lagging behind. The emergence of AI offers an exciting opportunity to help close that gap.

Exploring AI and Accessibility

When embedded in digital platforms, AI brings technology that can perceive, assess, and respond to human diversity. Conventional software follows fixed, hand-written rules; AI models instead learn from data, anticipate needs, and make decisions on the fly. That adaptability creates opportunities for accessibility through dynamic, personalized support.

Here’s an overview of how AI provides a more inclusive digital experience:

Cognitive Abilities

AI systems can understand and interpret images, text, and speech to provide a more accessible digital experience. For example, AI could:

- Auto-generate image descriptions (alt text) using computer vision.

- Detect elements on screen and read them aloud to users.

- Transcribe spoken words into text in real time.

Predictive Capabilities

Using machine learning, AI tools can predict a user's needs from past behavior and offer suggestions and adjustments in advance. Examples include predictive text, voice-driven interfaces, and dynamic font resizing as people read.

Personalization

AI can tailor a digital presentation to each user's accessibility needs, for example by adjusting contrast, simplifying layouts, or changing navigation styles.

Continuous Learning

Unlike static tools, AI systems can improve over time. As they learn more about their users, they refine their recommendations and increase their impact on accessibility.

AI-Driven Solutions Making a Difference

Here are several AI-powered solutions that are already making a difference in accessibility:

Voice Assistants and Conversational Interfaces

AI-driven virtual assistants such as Siri, Google Assistant, and Alexa have changed how people with motor or vision impairments use their devices. They let users run commands, retrieve information, and control smart appliances by voice alone.

With voice commands, a person with limited mobility can turn on the lights, write an email, or call a friend, gaining a level of independence that was previously difficult to achieve.

Speech Recognition and Real-Time Captioning

Speech recognition tools such as Google Live Transcribe and Microsoft's real-time captions are transforming communication for the deaf and hard-of-hearing community. These AI-powered apps transcribe spoken words into text and can adapt to different pronunciations and background noise.

The technology works in meetings, classrooms, and video content, expanding the ways people can participate and engage.

Computer Vision for the Visually Impaired

Applications like Seeing AI and Be My Eyes use computer vision and deep learning to describe the world to users who are blind or have low vision. They can identify objects, read text, recognize faces, and even estimate how someone is feeling from their facial expression.

These capabilities provide real-time awareness of one's surroundings and change how people who are blind experience everyday interactions.

Automatic Alt Text and Image Description

Facebook and Instagram use AI, including image recognition and OCR, to generate automatic alt text for images, so that screen reader users hear a description of the image. Microsoft's Azure Cognitive Services offers image recognition APIs that automatically generate tags describing an image's content.

This capability matters because screen reader users often cannot access non-text content such as illustrations or infographics. Automated alt text provides a baseline of accessibility even when creators forget or overlook it.


AI in Inclusive Design and Development

AI is not limited to end-user tools; it increasingly shapes how designers and developers build accessible experiences from the start.

AI in Inclusive Design

AI can help UX/UI teams uncover non-obvious accessibility issues by analyzing user behavior, such as frequent zooming or repeated navigation errors, so they can design more inclusively from the outset.

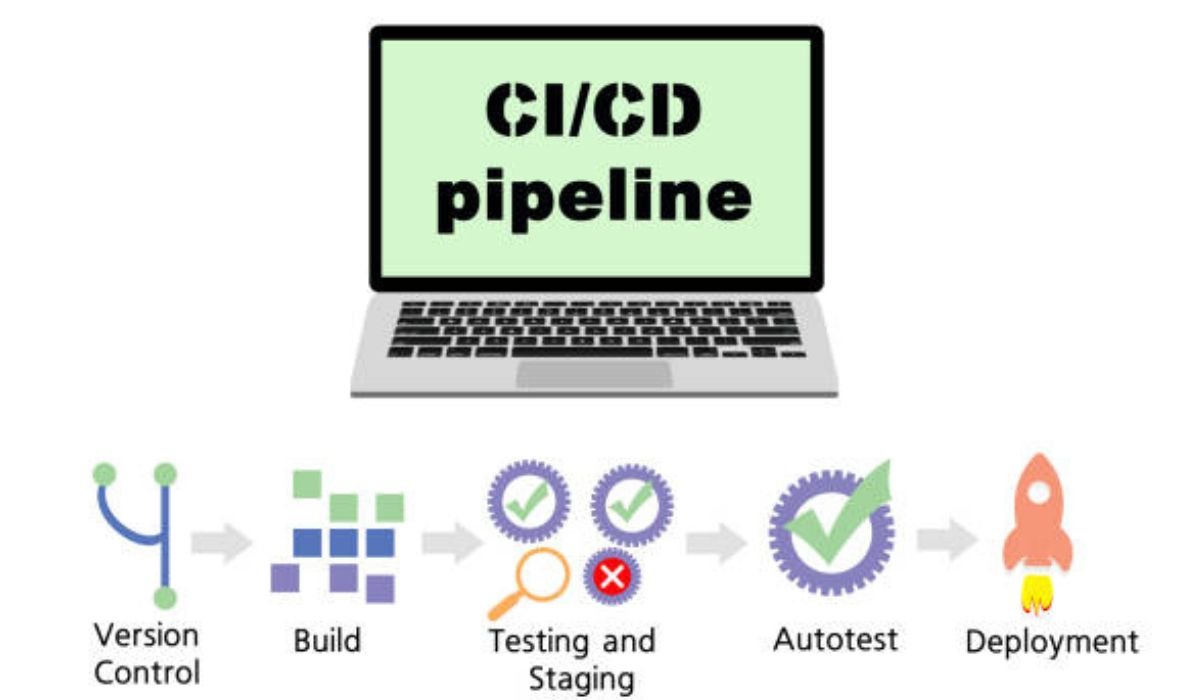

AI in Accessibility Testing Tools

Automated testing tools like Google Lighthouse (whose accessibility audits are built on axe-core) flag issues such as low color contrast and missing or invalid button labels. These core checks are rule-based, and AI capabilities are increasingly being built around them.
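Production tools like axe-core maintain a large, carefully tested rule set, but the basic idea behind a rule-based check is easy to illustrate. The sketch below is not how Lighthouse or axe-core are implemented; it is a minimal, hypothetical linter (the `A11yLinter` class and `lint` function are illustrative names) built on Python's standard-library `html.parser`. It flags three issues mentioned in this article: images with no `alt` attribute, buttons with no accessible name, and videos with no captions track. It ignores CSS, ARIA roles, and content rendered by JavaScript.

```python
from html.parser import HTMLParser


class A11yLinter(HTMLParser):
    """Hypothetical, minimal rule-based checker for a few common accessibility issues."""

    def __init__(self) -> None:
        super().__init__()
        self.issues: list[str] = []
        self._open_buttons: list[bool] = []  # one "has accessible name" flag per open <button>
        self._in_video = False
        self._video_has_captions = False

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        if tag == "img":
            if "alt" not in a:
                # alt="" is valid for decorative images, so only a *missing* alt is flagged.
                self.issues.append(f"<img src={a.get('src')!r}> has no alt attribute")
            elif a["alt"] and self._open_buttons:
                self._open_buttons[-1] = True  # a non-empty alt names an image-only button
        elif tag == "button":
            self._open_buttons.append(bool(a.get("aria-label") or a.get("aria-labelledby")))
        elif tag == "video":
            self._in_video, self._video_has_captions = True, False
        elif tag == "track" and self._in_video and a.get("kind") == "captions":
            self._video_has_captions = True

    def handle_data(self, data):
        if self._open_buttons and data.strip():
            self._open_buttons[-1] = True  # visible text content names the button

    def handle_endtag(self, tag):
        if tag == "button" and self._open_buttons:
            if not self._open_buttons.pop():
                self.issues.append("<button> has no accessible name")
        elif tag == "video" and self._in_video:
            if not self._video_has_captions:
                self.issues.append("<video> has no captions track")
            self._in_video = False


def lint(html: str) -> list[str]:
    """Parse an HTML string and return the list of issues found."""
    parser = A11yLinter()
    parser.feed(html)
    parser.close()
    return parser.issues


if __name__ == "__main__":
    page = (
        '<img src="logo.png"><img src="divider.png" alt="">'
        '<button><svg></svg></button><button aria-label="Close"></button>'
        '<video src="intro.mp4"></video>'
    )
    for issue in lint(page):
        print(issue)
```

Rules like these are cheap and deterministic, which is why they form the backbone of automated testing; the AI features described below sit on top of this kind of check rather than replacing it.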

AI tools can also help prioritize fixes, suggest remediations, and simulate how people with disabilities navigate websites, which improves the speed and accuracy of accessibility testing.

Platforms such as LambdaTest take this a step further by integrating accessibility testing into an all-in-one testing cloud. With built-in axe-core integration, broad browser and device support, and intelligent visual regression tracking, LambdaTest enables teams to find and fix accessibility issues quickly and at scale. LambdaTest also supports parallel testing, so teams can validate accessibility across many environments at once, which is crucial for delivering effective, scalable accessibility solutions.

Artificial Intelligence and Inclusive Education

AI is already used in inclusive education, and it has great potential to make learning more accessible and individualized. AI can personalize each learner's experience in ways that remove barriers that once restricted equitable access, opening the door to a high-quality education.

Some examples include:

- Text-to-speech tools that read textbooks aloud, word for word, for students with visual impairments or learning disabilities such as dyslexia.

- AI tutors that provide one-on-one help matched to each learner's level of understanding and pace.

- Visual recognition that converts handwritten math problems into digital form, so learners can work with the questions as text and receive feedback on their own work.

- Language translation for multilingual learners, and AI sign language recognition for learners who communicate in ASL.

Together, these tools help create equitable academic experiences so that no student faces barriers because of a disability.

The Role of AI in Workplace Accessibility

Accessibility matters not only in schools and online but also in the workplace. AI is increasingly built into enterprise systems to support employees with a range of abilities.

For example, AI transcription tools can help employees who are deaf or hard of hearing participate in meetings. Predictive text can help employees with motor disabilities type faster. Smart scheduling tools can help employees plan meetings around their energy levels or health constraints. AI is also used in hiring and onboarding platforms to help managers reduce bias by focusing on skills rather than personal characteristics.

Ethics and Challenges

Using AI for accessibility holds real promise, but it also raises important ethical concerns:

- Bias in Training Data: If training datasets do not include people with disabilities, AI tools can perpetuate exclusion.

- Privacy: AI systems that track voice, face, or movement may raise privacy concerns if that data is not handled responsibly.

- Over-automation: AI can automate alt text and captioning, but it cannot fully replace human judgment.

- The Accessibility of AI Accessibility: Ironically, some AI-based accessibility tools are not themselves designed to be usable by all users.

What’s Ahead

There are many exciting possibilities for the future:

- Emotion-aware AI that adapts to a user's emotional state.

- Real-time AI sign language interpretation.

- Brain-computer interfaces (BCIs) that allow digital interaction without hands.

- AI that’s able to personalize interfaces according to individual preferences.

Conclusion: Creating an Inclusive Digital Future with AI

AI is not perfect, but when used responsibly it can create opportunities for greater participation by people with disabilities. It can make basic accessibility features widely available and substantially increase inclusion for individuals.

However, with opportunity comes responsibility. If we want equity for all users of the digital world, we need to ensure that the AI systems we design are ethical, inclusive, and representative of their entire user base. Accessibility and inclusive practices need to be built into the baseline of digital innovation, not treated as an afterthought.

In practice, browser automation tools such as Selenium with ChromeDriver can help validate accessibility and user interactions across browsers and devices, enabling teams to integrate inclusive testing into automated workflows. By applying AI with intent and empathy and pairing it with robust automation frameworks, we can create a digital environment where everyone has the opportunity to engage, contribute, and thrive.
