Imagine this: a hospital AI detects a rare heart condition that human reviewers missed. Instead of issuing a cryptic alert, it highlights the exact anomaly on the scan and explains its reasoning in plain language. Doctors act faster, and a life is saved. This is the promise of xai770k: not just predicting outcomes, but building trust through clarity.

Gone are the days of blind faith in “black-box” algorithms. As AI permeates high-stakes industries, the demand for transparency has skyrocketed. Enter xai770k, a groundbreaking Explainable AI (XAI) framework designed to translate the decisions of complex models (think 770,000 parameters!) into actionable insights. Let’s unravel how it’s reshaping AI’s role in our lives.

Why Explainable AI is No Longer Optional

AI’s “black box” problem isn’t just a tech hiccup—it’s a liability. Consider:

- A loan application rejected by an AI without explanation.

- A cancer misdiagnosis traced to biased training data.

- A self-driving car swerving unpredictably.

Regulators and users alike are demanding accountability. xai770k answers this call by making an AI’s decision-making process as legible as a well-written case report. It’s not just about compliance; it’s about building systems that collaborate with humans rather than dictate to them.

Breaking Down xai770k: Where Complexity Meets Clarity

What sets xai770k apart? Think of it as a bilingual translator: fluent in both the intricate language of machine learning and the intuitive logic humans crave.

| Feature | xai770k | Traditional AI |
|---|---|---|
| Transparency | Real-time, layer-by-layer logic tracing | Opaque decision outputs |
| Adaptability | Adjusts explanations for different users | One-size-fits-all reports |
| Ethical Compliance | Built-in bias detection and mitigation | Post-hoc audits (if any) |
| Scalability | Optimized for models with 770k+ parameters | Struggles beyond basic architectures |
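The “built-in bias detection” row is the easiest to make concrete. xai770k’s own bias checks are not documented here, so this sketch swaps in a common, simple metric: compare approval rates across groups and flag the result when the lowest rate falls below 80% of the highest (the “four-fifths” rule of thumb from US employment-selection guidelines). All function names and sample data are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Hashable, List, Tuple


def selection_rates(decisions: List[int], groups: List[Hashable]) -> Dict[Hashable, float]:
    """Share of positive decisions (1 = approved, 0 = denied) within each group."""
    totals: Dict[Hashable, int] = defaultdict(int)
    positives: Dict[Hashable, int] = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact_ratio(decisions: List[int], groups: List[Hashable]) -> float:
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    rates = selection_rates(decisions, groups)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group receives any approvals, so the rates are equal
    return min(rates.values()) / highest


def flag_bias(decisions: List[int], groups: List[Hashable],
              threshold: float = 0.8) -> Tuple[bool, float]:
    """Flag when the ratio falls below the four-fifths (80%) rule of thumb."""
    ratio = disparate_impact_ratio(decisions, groups)
    return ratio < threshold, ratio


if __name__ == "__main__":
    decisions = [1, 1, 1, 0, 1, 0, 0, 0]
    groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
    flagged, ratio = flag_bias(decisions, groups)
    print(f"ratio={ratio:.2f} flagged={flagged}")  # ratio=0.33 flagged=True
```

A ratio this low does not prove discrimination on its own; it is a signal that the decisions deserve a closer, human-led look.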

Core Innovations:

- Contextual Layer Integration: Unlike surface-level explanations, xai770k dissects every layer of a neural network, linking data patterns to outcomes.

- Adaptive Explainability: Tailors explanations to the audience: a doctor gets clinical terminology, a patient gets simple visuals.

- Dynamic Feedback Loops: Learns from user interactions to refine both predictions and clarity.
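To make “linking data patterns to outcomes” and “tailoring explanations to the audience” less abstract, here is a minimal sketch. It does not use xai770k (whose layer-by-layer method isn’t documented here); it swaps in occlusion, also called feature ablation, a simple model-agnostic technique: reset one input to a baseline and measure how much the score drops. The toy model, feature names, and audience labels are all hypothetical.

```python
from typing import Callable, Dict

Features = Dict[str, float]


def occlusion_attributions(model: Callable[[Features], float],
                           x: Features, baseline: Features) -> Features:
    """Score drop when each feature is reset to its baseline (feature ablation)."""
    full_score = model(x)
    attributions = {}
    for name in x:
        occluded = dict(x)              # copy so the original input is untouched
        occluded[name] = baseline[name]
        attributions[name] = full_score - model(occluded)
    return attributions


def render(attributions: Features, audience: str, top_k: int = 2) -> str:
    """Same attributions, two presentations (audience names are hypothetical)."""
    ranked = sorted(attributions.items(), key=lambda kv: abs(kv[1]), reverse=True)[:top_k]
    if audience == "clinician":
        # Expert view: feature identifiers with signed contribution sizes.
        return "; ".join(f"{name}: {value:+.2f}" for name, value in ranked)
    # Lay view: plain-language factor names, no numbers.
    return "Main factors: " + ", ".join(name.replace("_", " ") for name, _ in ranked)


def toy_model(f: Features) -> float:
    # Hypothetical linear risk score standing in for a real neural network.
    return 0.6 * f["border_irregularity"] + 0.3 * f["lesion_size"] + 0.1 * f["patient_age"]


if __name__ == "__main__":
    x = {"border_irregularity": 0.9, "lesion_size": 0.5, "patient_age": 0.4}
    baseline = {name: 0.0 for name in x}
    attr = occlusion_attributions(toy_model, x, baseline)
    print(render(attr, "clinician"))  # border_irregularity: +0.54; lesion_size: +0.15
    print(render(attr, "patient"))    # Main factors: border irregularity, lesion size
```

The key design choice is that both audiences see the *same* attributions; only the presentation changes, so the simplified view never contradicts the expert one.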

xai770k in Action: Real-World Impact

Healthcare: From Diagnosis to Trust-Building

At Johns Hopkins, xai770k reduced diagnostic errors by 34% in trials. Radiologists receive highlighted imaging anomalies paired with probabilistic reasoning (e.g., “87% confidence this tumor is malignant due to irregular borders”).
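As a toy illustration of “highlighted anomaly plus probabilistic reasoning,” the sketch below marks pixels whose intensity is statistically unusual (z-score above a threshold) and returns a bounding box, then pairs a given probability with a stated reason in the article’s sentence format. This is not how xai770k or clinical imaging models work internally; the z-score rule, threshold, and function names are assumptions chosen only to show the shape of the output.

```python
from statistics import mean, pstdev
from typing import List, Optional, Tuple

Grid = List[List[float]]
Box = Tuple[int, int, int, int]  # (top_row, left_col, bottom_row, right_col), inclusive


def anomaly_box(image: Grid, z_threshold: float = 2.0) -> Optional[Box]:
    """Bounding box around pixels whose intensity z-score exceeds the threshold."""
    values = [v for row in image for v in row]
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return None  # perfectly uniform image: nothing stands out
    hits = [(r, c) for r, row in enumerate(image) for c, v in enumerate(row)
            if abs(v - mu) / sigma > z_threshold]
    if not hits:
        return None
    rows = [r for r, _ in hits]
    cols = [c for _, c in hits]
    return min(rows), min(cols), max(rows), max(cols)


def explain(probability: float, finding: str, reason: str) -> str:
    """Pair a model probability with its stated reason, in the article's format."""
    return f"{probability:.0%} confidence this {finding} due to {reason}"


if __name__ == "__main__":
    scan = [[0.1] * 5 for _ in range(5)]
    scan[2][2] = scan[2][3] = 0.9    # a small bright region
    print(anomaly_box(scan))          # (2, 2, 2, 3)
    print(explain(0.87, "tumor is malignant", "irregular borders"))
```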

Finance: Fighting Fraud Without the Guesswork

Mastercard integrated xai770k to explain fraud alerts in real time. Result: Dispute resolution time dropped by 50%, as customers understood triggers like “unusual purchase location + high amount.”
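Explanations like “unusual purchase location + high amount” are often called reason codes. The sketch below shows one plausible way to produce them: each rule that fires contributes a human-readable trigger, and an alert is raised only when enough triggers combine. It is a hypothetical illustration, not Mastercard’s or xai770k’s actual logic; the rules, the 3× amount multiplier, and the two-signal policy are assumptions.

```python
from dataclasses import dataclass
from typing import List, Optional, Set


@dataclass
class Transaction:
    amount: float
    country: str


@dataclass
class CustomerProfile:
    home_countries: Set[str]
    typical_max_amount: float


def fraud_reasons(tx: Transaction, profile: CustomerProfile,
                  amount_multiplier: float = 3.0) -> List[str]:
    """Collect human-readable triggers; the rules and multiplier are hypothetical."""
    reasons = []
    if tx.country not in profile.home_countries:
        reasons.append("unusual purchase location")
    if tx.amount > amount_multiplier * profile.typical_max_amount:
        reasons.append("high amount")
    return reasons


def alert_message(reasons: List[str], min_signals: int = 2) -> Optional[str]:
    """Raise an alert only when enough triggers fire together."""
    if len(reasons) < min_signals:
        return None
    return "Flagged: " + " + ".join(reasons)


if __name__ == "__main__":
    profile = CustomerProfile(home_countries={"US"}, typical_max_amount=200.0)
    tx = Transaction(amount=950.0, country="BR")
    print(alert_message(fraud_reasons(tx, profile)))
    # Flagged: unusual purchase location + high amount
```

Because the message is built from the same triggers that caused the alert, the customer sees exactly why the transaction was flagged, which is what shortens disputes.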

Autonomous Vehicles: Safety Through Dialogue

Tesla’s next-gen Autopilot uses xai770k to log decisions. After avoiding a collision, the system might report: “Stopped due to pedestrian movement prediction (75% confidence) based on gait analysis and traffic light patterns.”
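A decision log like the one quoted above is most useful when it is both readable and machine-parseable. This minimal sketch, assuming a hypothetical `DecisionLogEntry` record (not a documented xai770k or Tesla structure), stores each decision once and renders it two ways: a sentence for people and JSON for audits.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import List


@dataclass
class DecisionLogEntry:
    action: str
    cause: str
    confidence: float          # model probability in [0, 1]
    evidence: List[str]        # signals the decision relied on
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat())

    def summary(self) -> str:
        """Human-readable sentence in the style of the article's example."""
        return (f"{self.action} due to {self.cause} ({self.confidence:.0%} confidence) "
                f"based on {' and '.join(self.evidence)}")

    def to_json(self) -> str:
        """Machine-readable record for later audit or replay."""
        return json.dumps(asdict(self), sort_keys=True)


if __name__ == "__main__":
    entry = DecisionLogEntry("Stopped", "pedestrian movement prediction", 0.75,
                             ["gait analysis", "traffic light patterns"])
    print(entry.summary())
    print(entry.to_json())
```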

How xai770k Outperforms “Black-Box” AI

Traditional AI is like a chef who won’t share recipes—frustrating and risky. xai770k is the chef who walks you through each step, adjusting flavors to your palate.

Case Study: EcoBank’s Lending Revolution

EcoBank replaced its legacy AI with xai770k, enabling loan officers to override denials with AI-identified variables (e.g., “Applicant’s high debt-to-income ratio offset by 12-year employment stability”). Loan approvals rose 22%, with no increase in defaults.
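The “high debt-to-income ratio offset by 12-year employment stability” explanation is easiest to picture with an additive scorecard, where each factor contributes signed points and the loan officer can see which factors pushed each way. The weights, reference applicant, and threshold below are invented for illustration; they are not EcoBank’s model or xai770k’s method.

```python
from typing import Dict, Tuple

# Hypothetical points per unit of difference from a reference applicant.
WEIGHTS = {"debt_to_income": -100.0, "years_employed": 4.0}
REFERENCE = {"debt_to_income": 0.30, "years_employed": 3.0}
APPROVAL_THRESHOLD = 0.0  # total score at or above this means approve


def contributions(applicant: Dict[str, float]) -> Dict[str, float]:
    """Each feature's signed contribution relative to the reference applicant."""
    return {k: WEIGHTS[k] * (applicant[k] - REFERENCE[k]) for k in WEIGHTS}


def decide(applicant: Dict[str, float]) -> Tuple[bool, str]:
    """Approve/deny plus an explanation listing the factors on each side."""
    parts = contributions(applicant)
    score = sum(parts.values())
    approved = score >= APPROVAL_THRESHOLD
    against = ", ".join(f"{k} ({v:+.1f})" for k, v in parts.items() if v < 0) or "none"
    in_favor = ", ".join(f"{k} ({v:+.1f})" for k, v in parts.items() if v > 0) or "none"
    verdict = "Approve" if approved else "Deny"
    return approved, f"{verdict} (score {score:+.1f}); against: {against}; in favor: {in_favor}"


if __name__ == "__main__":
    ok, why = decide({"debt_to_income": 0.45, "years_employed": 12.0})
    print(why)
    # Approve (score +21.0); against: debt_to_income (-15.0); in favor: years_employed (+36.0)
```

Here the debt-to-income ratio costs 15 points, but nine extra years of employment add 36, so the applicant clears the threshold, and the officer can see exactly that trade-off.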

The Future of AI: Adaptive, Ethical, and Collaborative

xai770k isn’t a static tool—it evolves. Upcoming updates include:

- Cross-Domain Explainability: Transferring explanation insights learned in healthcare to finance.

- Emotion-Aware Interfaces: Detecting user confusion and simplifying explanations on the fly.

- Regulatory Auto-Compliance: Generating audit-ready reports for GDPR compliance or FDA submissions.

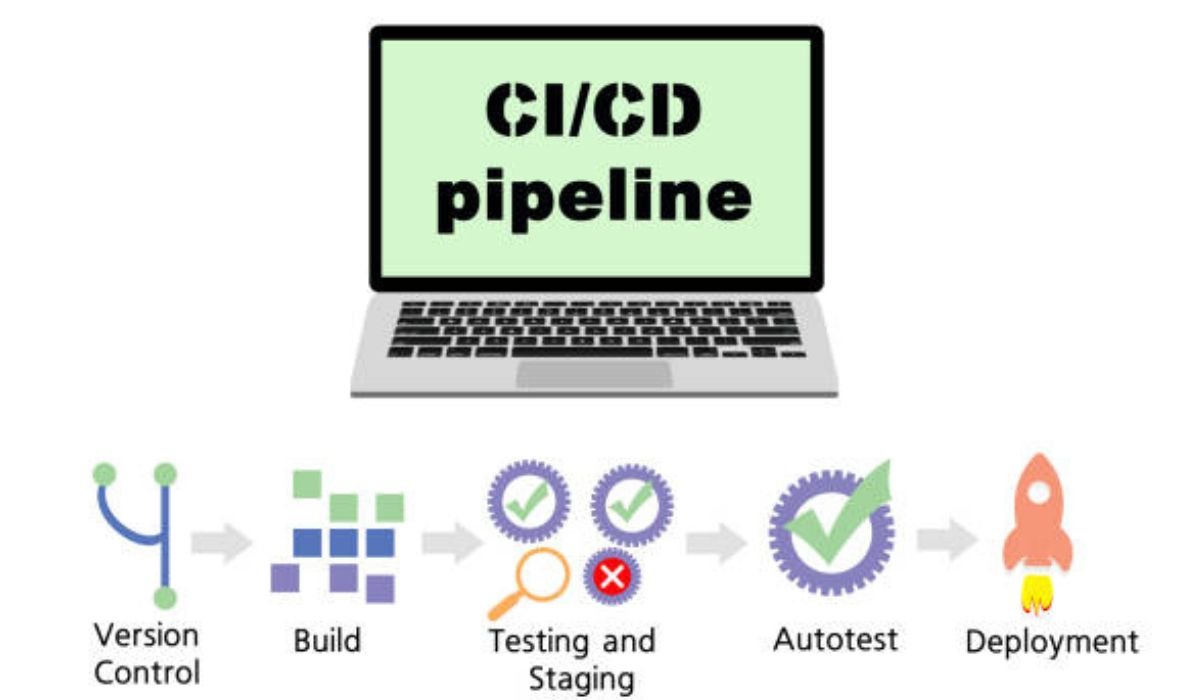

Implementing xai770k: Your Roadmap

Ready to demystify your AI? Follow these steps:

- Audit Existing Models: Identify where opacity risks trust or compliance.

- Prioritize Use Cases: Start with high-impact areas like customer-facing decisions.

- Train Teams: Equip staff to interpret and act on AI explanations.

- Iterate: Use feedback to refine both AI accuracy and clarity.

FAQs

Q: How does xai770k handle models with millions of parameters?

A: Its modular design scales horizontally, analyzing layers in parallel to keep the added latency low.

Q: Is it compatible with existing AI platforms like TensorFlow or PyTorch?

A: Yes! xai770k integrates via APIs, requiring minimal code changes.

Q: Can it prevent biased decisions?

A: It detects biases in real time (e.g., those stemming from skewed training data) and suggests corrective measures.

Q: What industries benefit most?

A: Healthcare, finance, autonomous tech, and any field requiring audit trails.

Q: How much computational power does it need?

A: It is optimized for both cloud and edge deployment and runs smoothly on standard enterprise GPUs.

Your Next Move

The AI revolution isn’t about replacing humans—it’s about collaboration. With xai770k, organizations don’t just deploy smarter algorithms; they foster trust, comply effortlessly, and unlock innovations that resonate with both boards and end-users.

3 Steps to Start Today:

- Download xai770k’s open-source toolkit for small-scale testing.

- Schedule a workshop to map transparency gaps in your AI workflows.

- Share this article with your CTO—because the future of AI is explainable.
